Mike Helland

> I have a couple of questions about that paper.
>
> In section 4, the paragraph bracketed by equations (10) and (11) makes three references to proper distance. Consulting a Wikipedia article for definitions of the various distance measures, it looks to me as though all three of that paragraph's references to proper distances should have referred to comoving distances. It seems to me that is a matter of some importance, because (in an expanding universe, in the context of that paragraph) proper distances differ from comoving distances by a factor of 1+z. Can you comment on this?

In a simply expanding space, comoving distance is:

$$d_C = z \frac{c}{H_0}$$

Proper distance is:

$$d_p = \frac{d_C}{1+z}$$

And luminosity distance is:

$$d_L = d_C(1+z) = d_p(1+z)^2$$

The author seems to have that straight, unless I'm missing something.
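As a quick numerical check of the three relations above, here is a minimal Python sketch. The value of `H0` (70 km/s/Mpc) and the function names are assumptions made for illustration; they do not come from the paper or the thread. The comoving distance uses the simple linear form written above, not a full cosmological integral.

```python
# Speed of light in km/s.
C_KM_S = 299_792.458
# Assumed Hubble constant in km/s/Mpc (illustrative value, not from the thread).
H0 = 70.0


def comoving_distance(z, h0=H0):
    """d_C = z * c / H_0, in Mpc (the simple linear form used above)."""
    return z * C_KM_S / h0


def proper_distance(z, h0=H0):
    """d_p = d_C / (1 + z), in Mpc."""
    return comoving_distance(z, h0) / (1.0 + z)


def luminosity_distance(z, h0=H0):
    """d_L = d_C * (1 + z) = d_p * (1 + z)**2, in Mpc."""
    return comoving_distance(z, h0) * (1.0 + z)


# Print the three distances side by side for a few redshifts.
for z in (0.1, 1.0, 3.0):
    print(z, comoving_distance(z), proper_distance(z), luminosity_distance(z))
```

At any redshift the ratio d_C / d_p comes out as 1+z and d_L / d_p as (1+z)^2, which is the factor at issue in the question about section 4.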

ETA: I read it a couple more times. When he says "the proper distance has expanded by a factor of (1+z)", he's referring to the proper distance now, i.e. the comoving distance.

> I'd also be interested to hear your comments with regard to Figure 3 and its caption, whose concluding sentence says "The predictions of the expanding and nonexpanding universes are presented as the solid and dashed lines, respectively." From the figure, it is rather obvious that the solid line (which is said to be the prediction for an expanding universe) fits the data better than the dashed line (which is said to be the prediction for a nonexpanding universe).

Not sure. It looks mislabeled.

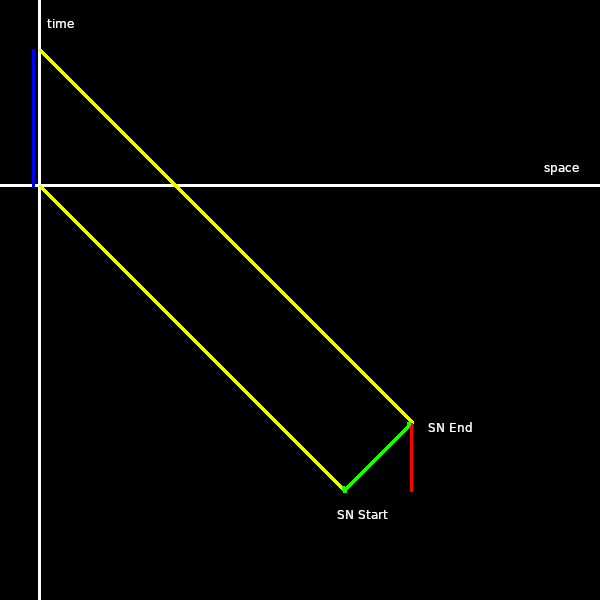

This is one of the big muddy spots I've brought up before.

In the expanding model, luminosity distance is:

$$d_L = d_C(1+z) = d_p(1+z)^2$$

In my non-expanding model it's based on the light travel time distance:

$$d_L = d_t(1+z)$$

What the author's non-expanding model is, I'm still figuring out.

It all depends on the "d" used in the flux-luminosity-distance relationship.
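To make that dependence concrete, here is a small Python sketch using the standard inverse-square relation F = L / (4π d_L²). The function names are hypothetical, and the light travel time distance `d_t` is taken as an input because its dependence on z is not specified in this post.

```python
import math


def flux(luminosity, d_l):
    """Standard flux-luminosity relation: F = L / (4 * pi * d_L**2)."""
    return luminosity / (4.0 * math.pi * d_l ** 2)


def d_l_expanding(d_c, z):
    """Expanding model as written above: d_L = d_C * (1 + z)."""
    return d_c * (1.0 + z)


def d_l_light_travel(d_t, z):
    """Non-expanding model as written above: d_L = d_t * (1 + z).

    d_t is supplied by the caller; how it depends on z is an open
    assumption here, not something stated in the post.
    """
    return d_t * (1.0 + z)


def flux_ratio(d_c, d_t, z, luminosity=1.0):
    """Flux predicted by the light-travel form divided by the expanding form."""
    return flux(luminosity, d_l_light_travel(d_t, z)) / flux(
        luminosity, d_l_expanding(d_c, z)
    )
```

Because both forms carry the same (1+z) factor, the flux ratio reduces to (d_C / d_t)², so any difference in predicted brightness comes entirely from which distance is plugged in as "d".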

The question I have for my model is what effect, if any, time dilation has on angular size. I'm not sure about that right now.
